While in quarantine at Marine Corps Air Station Miramar, jet-lagged and severely culture-shocked, I realized I didn’t have a birding life list. I had just seen my first Anna’s Hummingbird and some of the ‘Western’ versions of birds I knew (Bluebird, Meadowlark), so I thought it would be a fun activity to finally tally all of my lifers.

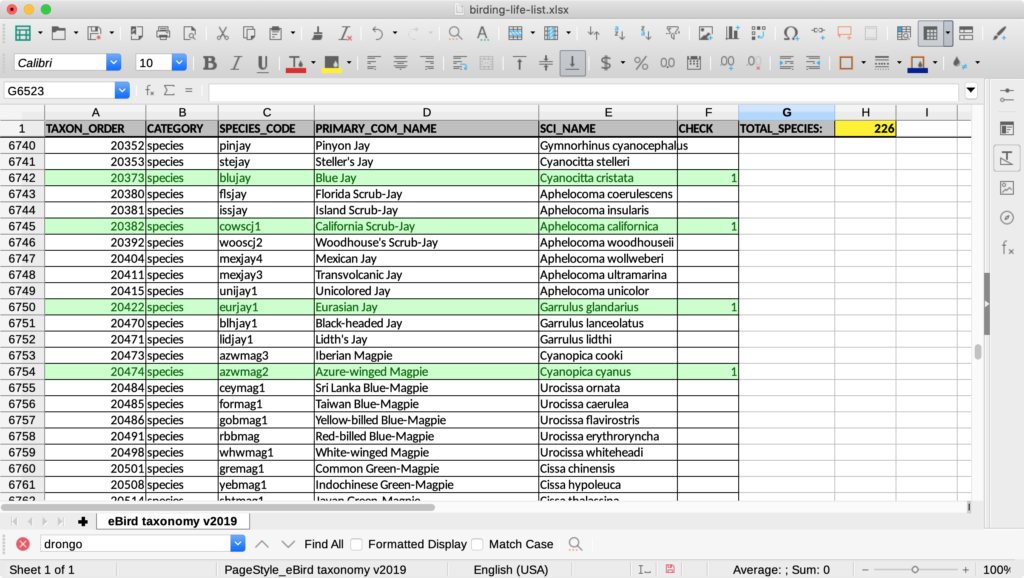

So I found the 2019 eBird Taxonomy Update, cleared out the duplicates and subspecies, and set up a spreadsheet that tallies and auto-highlights each species when you put a ‘1’ in the ‘CHECK’ column.

Since this is a fun activity, I want to share the spreadsheet so you can start your own tally:

Download ‘birding-life-list.xlsx’

As of this post, I’m at 226 species! The last one I added was a Chestnut-sided Warbler. For me to add a species to the list, it has to meet these simple requirements:

1. I have seen the bird myself.

2. The bird was seen in the wild.

3. I remember seeing it.

The third requirement is a little odd, but there are plenty of cases where we counted a bird on the Christmas Bird Count and I can’t remember whether I saw it or one of my companions did.

Anyway, I hope this list is useful to you and that you find it as exciting a quarantine activity as I have. I’ll leave you with some of my bird pics: